mongodb 数据库 linux系统下集群搭建过程

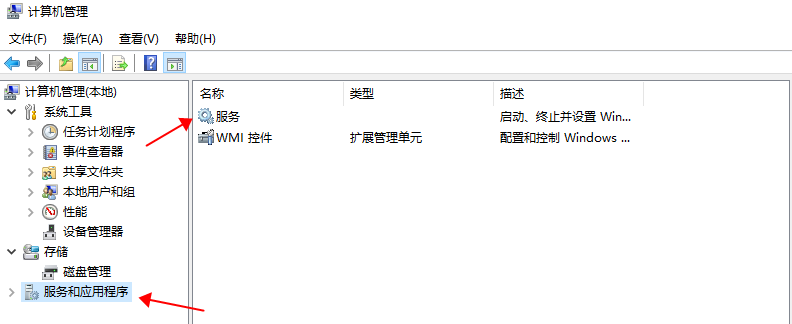

mongodb的集群结构如上图

网上有个mongo3.0的集群例子:

用openssl生成一个key,用于mongo集群内部数据通迅

mongod配置为件(config与shard通用)

mongo_node.conf:

engine: wiredTiger

directoryPerDB: true

journal:

enabled: true

systemLog:

destination: file

logAppend: true

operationProfiling:

slowOpThresholdMs: 10000

replication:

oplogSizeMB: 10240

processManagement:

fork: true

security:

authorization: “disabled”

mongos的配置文件(即图中的route)

mongos.conf:

destination: file

logAppend: true

processManagement:

fork: true

启动config集群(3个mongod进程)

KEYFILE=$WORK_DIR/key/keyfile

cat $KEYFILE

CONFFILE=$WORK_DIR/conf/mongo_node.conf

cat $CONFFILE

MONGOD=mongod

echo $MONGOD

$MONGOD –port 26001 –bind_ip_all –configsvr –replSet configReplSet –keyFile $KEYFILE –dbpath $WORK_DIR/config_cluster/conf_n1/data –pidfilepath $WORK_DIR/config_cluster/conf_n1/db.pid –logpath $WORK_DIR/config_cluster/conf_n1/db.log –config $CONFFILE

$MONGOD –port 26002 –bind_ip_all –configsvr –replSet configReplSet –keyFile $KEYFILE –dbpath $WORK_DIR/config_cluster/conf_n2/data –pidfilepath $WORK_DIR/config_cluster/conf_n2/db.pid –logpath $WORK_DIR/config_cluster/conf_n2/db.log –config $CONFFILE

$MONGOD –port 26003 –bind_ip_all –configsvr –replSet configReplSet –keyFile $KEYFILE –dbpath $WORK_DIR/config_cluster/conf_n3/data –pidfilepath $WORK_DIR/config_cluster/conf_n3/db.pid –logpath $WORK_DIR/config_cluster/conf_n3/db.log –config $CONFFILE

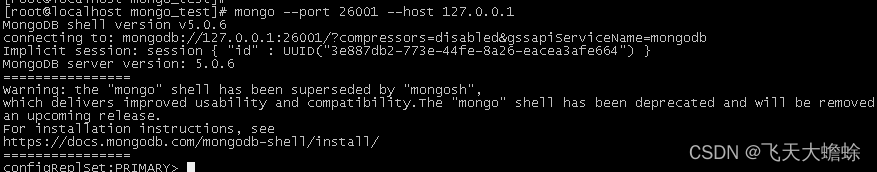

启动成功后

用命令mongo –port 26001 –host 127.0.0.1

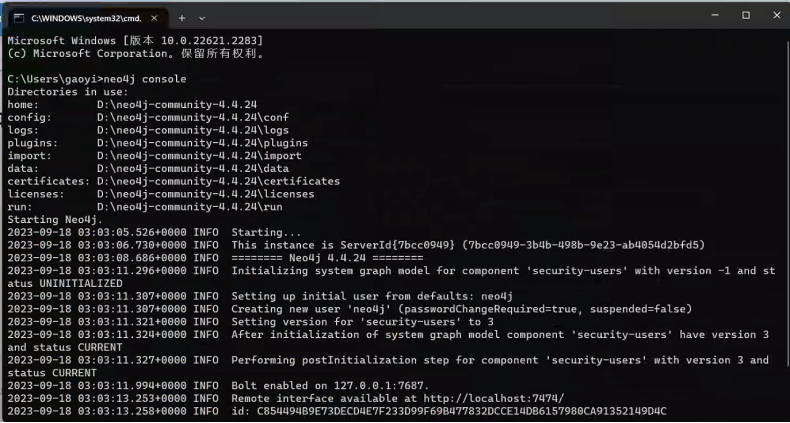

如下图,进入mongo的shell

在shell中输入如下js代码 设置config集群

_id:”configReplSet”,

configsvr: true,

members:[

{_id:0, host:’127.0.0.1:26001′},

{_id:1, host:’127.0.0.1:26002′},

{_id:2, host:’127.0.0.1:26003′}

]};

rs.initiate(cfg);

三个config mongo进程会自动选出一个primary,过一会再进入shell就会发现 shell提示变成primary

顺便给config添加一个admin用户,(一个集群只要在primary进程添加一次,会自动同步给secondary)

db.createUser({

user:’admin’,pwd:’123456′,

roles:[

{role:’clusterAdmin’,db:’admin’},

{role:’userAdminAnyDatabase’,db:’admin’},

{role:’dbAdminAnyDatabase’,db:’admin’},

{role:’readWriteAnyDatabase’,db:’admin’}

]})

同样之后shard也做同样的添加用户操作,便于后继观察数据

启动shard

KEYFILE=$WORK_DIR/key/keyfile

cat $KEYFILE

CONFFILE=$WORK_DIR/conf/mongo_node.conf

cat $CONFFILE

MONGOD=mongod

echo $MONGOD

echo “start shard1 replicaset”

$MONGOD –port 27001 –bind_ip_all –shardsvr –replSet shard1 –keyFile $KEYFILE –dbpath $WORK_DIR/shard1/sh1_n1/data –pidfilepath $WORK_DIR/shard1/sh1_n1/db.pid –logpath $WORK_DIR/shard1/sh1_n1/db.log –config $CONFFILE

$MONGOD –port 27002 –bind_ip_all –shardsvr –replSet shard1 –keyFile $KEYFILE –dbpath $WORK_DIR/shard1/sh1_n2/data –pidfilepath $WORK_DIR/shard1/sh1_n2/db.pid –logpath $WORK_DIR/shard1/sh1_n2/db.log –config $CONFFILE

$MONGOD –port 27003 –bind_ip_all –shardsvr –replSet shard1 –keyFile $KEYFILE –dbpath $WORK_DIR/shard1/sh1_n3/data –pidfilepath $WORK_DIR/shard1/sh1_n3/db.pid –logpath $WORK_DIR/shard1/sh1_n3/db.log –config $CONFFILE

用mongo –port 27001 –host 127.0.0.1进入mongo shell

_id:”shard1″,

members:[

{_id:0, host:’127.0.0.1:27001′},

{_id:1, host:’127.0.0.1:27002′},

{_id:2, host:’127.0.0.1:27003′}

]};

rs.initiate(cfg);

同样用之前的添加用户的js

并用同样的方法启动shard2集群,用于实验数据分片

对应的目录与分片名改成shard2

启动route

KEYFILE=$WORK_DIR/key/keyfile

cat $KEYFILE

CONFFILE=$WORK_DIR/conf/mongos.conf

cat $CONFFILE

MONGOS=mongos

echo $MONGOS

echo “start mongos route instances”

$MONGOS –port=25001 –bind_ip_all –configdb configReplSet/127.0.0.1:26001,127.0.0.1:26002,127.0.0.1:26003 –keyFile $KEYFILE –pidfilepath $WORK_DIR/route/r_n1/db.pid –logpath $WORK_DIR/route/r_n1/db.log –config $CONFFILE

$MONGOS –port 25002 –bind_ip_all –configdb configReplSet/127.0.0.1:26001,127.0.0.1:26002,127.0.0.1:26003 –keyFile $KEYFILE –pidfilepath $WORK_DIR/route/r_n2/db.pid –logpath $WORK_DIR/route/r_n2/db.log –config $CONFFILE

$MONGOS –port 25003 –bind_ip_all –configdb configReplSet/127.0.0.1:26001,127.0.0.1:26002,127.0.0.1:26003 –keyFile $KEYFILE –pidfilepath $WORK_DIR/route/r_n3/db.pid –logpath $WORK_DIR/route/r_n3/db.log –config $CONFFILE

路由添加分片

用mongo –port 25001 –host 127.0.0.1 -u admin -p 123456进入shell

或者这样也可 mongo mongodb://admin:123456@127.0.0.1:25001

在mongo shell分别执行以下两行js

创建一个mongo database与collection

并设置分片

sh.enableSharding(“test”)

db.createCollection(“test_shard”)

sh.shardCollection(“test.test_shard”, {_id:”hashed”}, false, { numInitialChunks: 4} )

在mongo shell用以下js添加数据,可以修改循环次数避免测试时间过长

for(var i=0; i<1000; i++){

var dl = [];

for(var j=0; j<100; j++){

dl.push({

“bookId” : “BBK-” + i + “-” + j,

“type” : “Revision”,

“version” : “IricSoneVB0001”,

“title” : “Jackson’s Life”,

“subCount” : 10,

“location” : “China CN Shenzhen Futian District”,

“author” : {

“name” : 50,

“email” : “RichardFoo@yahoo.com”,

“gender” : “female”

},

“createTime” : new Date()

});

}

cnt += dl.length;

db.test_shard.insertMany(dl);

print(“insert “, cnt);

}

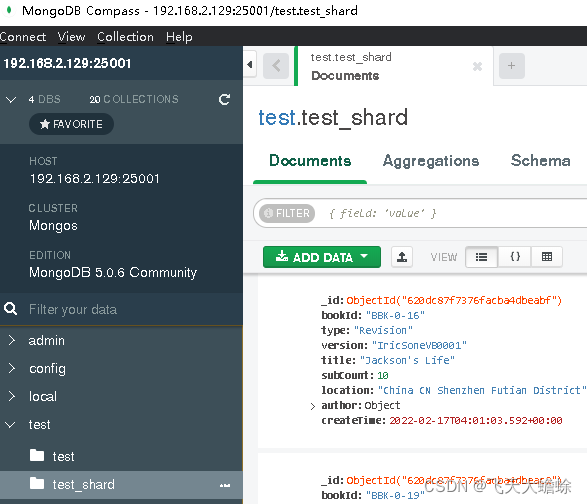

在windows下安装mongodb,利用自带的compass客户端观察两个shard集群

会发现数据分流到两个集群了

也可以直接连route观察数据

补充:

把js存到文件里给shell执行会比较方便

执行js命令如下:

mongo mongodb://admin:123456@127.0.0.1:25001 ./test.js

示例js代码:

conn = new Mongo(“mongodb://admin:123456@192.168.2.129:25001”);

db = conn.getDB(“testjs”)

sh.enableSharding(“testjs”)

db.createCollection(“testjs_col”)

sh.shardCollection(“testjs.testjs_col”, {_id:”hashed”}, false, { numInitialChunks: 4} )

var dl = [];

for(var j=0; j<10; j++){

dl.push({

“bookId” : “BBK-” + 0 + “-” + j,

“type” : “Revision”,

“version” : “IricSoneVB0001”,

“title” : “Jackson’s Life”,

“subCount” : 10,

“location” : “China CN Shenzhen Futian District”,

“author” : {

“name” : 50,

“email” : “RichardFoo@yahoo.com”,

“gender” : “female”

},

“createTime” : new Date()

});

}

db.testjs_col.insertMany(dl);

cursor = db.testjs_col.find();

printjson(cursor.toArray());

到此这篇关于mongodb linux下集群搭建的文章就介绍到这了,更多相关mongodb集群搭建内容请搜索以前的文章或继续浏览下面的相关文章希望大家以后多多支持!